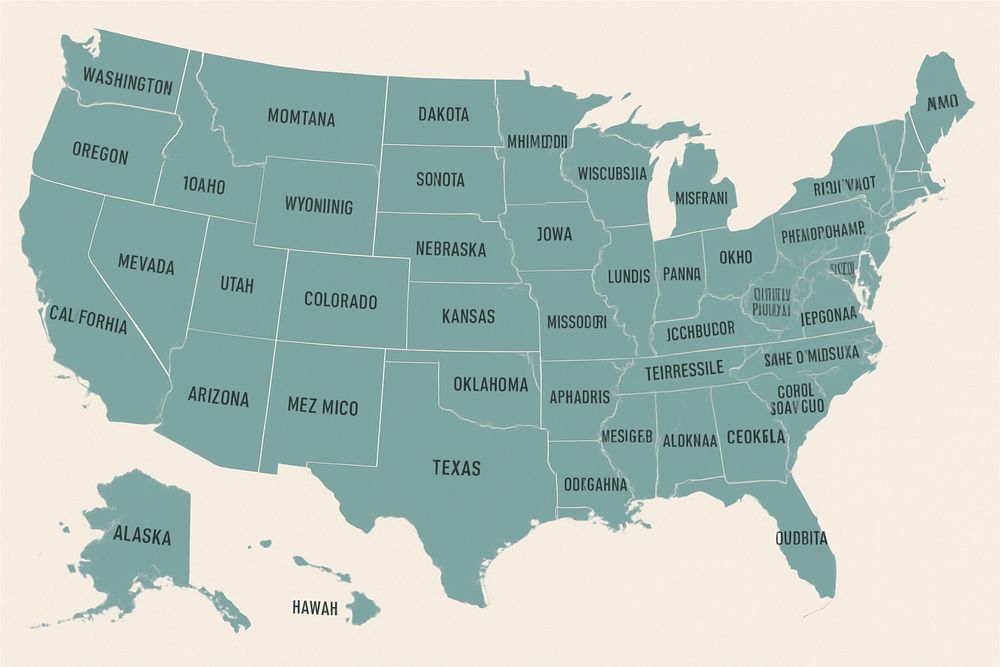

The Register and CNN recently published articles criticizing GPT-5 for producing incorrect or humorous images of U.S. presidents and maps, implying this reflects a lack of basic knowledge. In reality, these errors highlight the challenges of image generation, not gaps in subject understanding.

The CNN piece suggests that because GPT-5 mislabels a map or produces distorted portraits of U.S. presidents, it lacks fundamental knowledge of geography or history. That conclusion overlooks a key distinction between knowledge representation and image rendering.

If you ask GPT-5 to list all 50 U.S. states, it will do so accurately. If you give it an actual map to analyze, it can identify each state and where it sits. The difficulty arises when it has to convert that knowledge into graphical output.

Text generation is based on predicting the next token in a sequence, using patterns learned from vast amounts of language data. Image generation, by contrast, involves creating pixel patterns that visually convey a concept. A model may “know” where Vermont is on a map, but turning that into an accurate border outline in an image file is a separate skill, and one that current systems still struggle with.
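For readers who want a concrete feel for "predicting the next token," here is a deliberately tiny sketch in Python. It is a toy bigram counter, not how GPT-5 works internally: the corpus and the function names `train_bigram` and `predict_next` are made up for illustration. The point is only that this kind of prediction operates on discrete symbols, with no pixels or geometry involved.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for illustration only (not real training data).
CORPUS = (
    "vermont borders new hampshire . "
    "vermont borders new york . "
    "vermont borders massachusetts ."
)

def train_bigram(text):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    tokens = text.split()
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

model = train_bigram(CORPUS)
print(predict_next(model, "vermont"))  # most common word after "vermont"
print(predict_next(model, "borders"))  # most common word after "borders"
```

A real language model replaces the frequency table with a neural network and a far larger vocabulary, but the output is still a choice among tokens. Drawing a border is a different kind of task: it means getting thousands of pixel values right at once.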

To draw an analogy: asking GPT-5 to produce an accurate visual depiction of every U.S. president is like asking a historian with a PhD in American history to sketch all of their portraits from memory. The results may be comical, but that does not indicate ignorance of who those presidents are or when they served.

Evaluating an AI’s knowledge based solely on the quality of its drawings misrepresents its capabilities. Poor visual outputs are a limitation of current image-generation techniques, not evidence of missing subject knowledge.

Original Register article for reference

To make this clearer, try the live demo below. You will see that the text-based version gets it right, while the image version may miss in amusing ways.